Game engines were originally built to render fictional worlds for entertainment. The same engines can also be used to build digital twins, which are detailed virtual replicas of real-world environments.

Researchers from Osaka University have found a way to use images generated automatically from digital city twins to train deep learning models that can quickly analyze photos of real cities and accurately separate the buildings that appear in them.

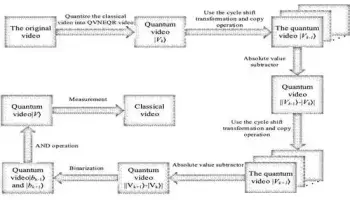

A convolutional neural network is a type of deep learning network designed to process grid-structured data such as photographs. Recent advances in deep learning have fundamentally changed how tasks like architectural segmentation are carried out.

However, a deep convolutional neural network (DCNN) model requires a substantial amount of labeled training data, and labeling these data manually can be time-consuming and extremely expensive.

To build the synthetic digital city twin data, the researchers used a 3D city model from the PLATEAU platform, which holds highly detailed 3D models of most Japanese cities. They imported this model into the Unity game engine and mounted a camera on a virtual car, which drove around the city and captured virtual images under a variety of lighting and weather conditions.

The Google Maps API was then used to obtain real street-level images of the same study area for the experiments.

The researchers found that training on synthetic data from the digital city twins produces better results than training on purely virtual data that has no real-world counterpart. Furthermore, segmentation accuracy improves when synthetic data is added to a real dataset.
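As a rough illustration of what "segmentation accuracy" can mean, the sketch below computes intersection over union (IoU) between a predicted facade mask and a ground-truth mask. The article does not say which metric the researchers used, so IoU is an assumption here, and the function name `facade_iou` and the tiny example masks are hypothetical.

```python
def facade_iou(predicted, truth):
    """Intersection over union for two binary masks given as lists of rows.

    A value of 1 marks a pixel labeled as building facade, 0 marks background.
    IoU is an assumed metric; the article does not name the one actually used.
    """
    intersection = 0
    union = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly, so treat that case as a score of 1.0.
    return intersection / union if union else 1.0


# Hypothetical 2x3 masks, for illustration only.
predicted_mask = [[1, 1, 0],
                  [0, 1, 0]]
truth_mask = [[1, 0, 0],
              [0, 1, 1]]

score = facade_iou(predicted_mask, truth_mask)
print(score)  # 2 shared facade pixels out of 4 in the union -> 0.5
```

A higher score means the predicted facade region overlaps more closely with the labeled one.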

Most notably, the researchers found that the segmentation accuracy of the DCNN improves greatly when a certain proportion of real data is included in the digital city twin synthetic dataset. In fact, its performance becomes competitive with that of a DCNN trained on 100% real data.
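The idea of mixing a share of real images into a mostly synthetic training set can be sketched as follows. This is a minimal illustration, not the researchers' pipeline: the function `build_hybrid_set`, the file names, and the 10% real fraction are all hypothetical placeholders, since the article does not state the proportion that was used.

```python
import random


def build_hybrid_set(synthetic, real, real_fraction, size, seed=0):
    """Sample a training list in which `real_fraction` of the items are real.

    `synthetic` and `real` are lists of image identifiers (hypothetical here).
    """
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be between 0 and 1")
    n_real = round(size * real_fraction)
    n_synthetic = size - n_real
    if n_real > len(real) or n_synthetic > len(synthetic):
        raise ValueError("not enough images to build a set of this size")
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    mixed = rng.sample(real, n_real) + rng.sample(synthetic, n_synthetic)
    rng.shuffle(mixed)
    return mixed


# Placeholder file names; the 0.1 fraction is an arbitrary example value.
synthetic_images = [f"synthetic_{i:03d}.png" for i in range(100)]
real_images = [f"real_{i:03d}.png" for i in range(20)]

hybrid = build_hybrid_set(synthetic_images, real_images,
                          real_fraction=0.1, size=50)
print(len(hybrid), sum(name.startswith("real_") for name in hybrid))
```

Varying `real_fraction` and retraining at each setting is one simple way to explore how much real data a model needs before its accuracy approaches that of a model trained only on real images.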

“These results reveal that our proposed synthetic dataset could potentially replace all the real images in the training set,” says Tomohiro Fukuda, the corresponding author of the paper.

Automatically separating the individual building facades that appear in an image is useful for construction management and architectural design, for large-scale measurements in retrofit and energy analyses, and even for visualizing building facades that have been demolished. The system was tested on multiple cities, demonstrating the transferability of the proposed framework.

For most contemporary architectural styles, the hybrid dataset of real and synthetic data produces promising prediction results. Because manual data annotation is not required, it offers a potential method for training DCNNs for architectural segmentation tasks in the future.