Recent technological advances have opened up new opportunities for remote tourism and for the teleoperation of robotic systems. Computer scientists have used these advances to build increasingly sophisticated systems that let people visit remote places in immersive ways.

Researchers at the Italian Institute of Technology (IIT) in Genoa recently unveiled an avatar-based system that lets a human operator see and move around in a real-world environment remotely and in real time, as if physically present. The technology is described in a paper pre-published on arXiv and demonstrated in a YouTube video. It is based on iCub3, a humanoid robot also developed at IIT. The team used it to visit the Venice Biennale, a well-known art and architecture event in Venice, Italy.

“My lab, the Artificial and Mechanical Intelligence lab at IIT, has various research axes, one of which is what we call telexistence,” Daniele Pucci, the project’s coordinator, told Tech Xplore. “The fundamental purpose of this project is to use humanoid robots to create physical avatars for people. Thanks to a robotic (humanoid) body, this technology will allow us to be physically present and act effectively in a faraway location.”

The term “telexistence” refers to a person’s ability to feel present in a remote location and to interact physically with its surroundings as if they were actually there. This is usually achieved by combining virtual reality (VR) technology with physical robots.

Pucci and his colleagues have been working on telexistence systems for several years. Their current work builds on a study they conducted in 2017 and on the research that followed it.

“We demonstrated an early avatar system using iCub 2.0 in September 2018,” Pucci added. “However, iCub 2.0 could not effectively play the role of an avatar for humans, as its height (about one meter) and the limited workspace of its arms prevented it from operating well in an environment made for adult humans. For instance, the robot had difficulty reaching objects on a table.”

To improve on their earlier system, Pucci and his colleagues began working on a new version of the robot, iCub3, in 2019. It is 1.25 m tall, has improved actuation, and integrates more advanced sensors. These features make it a better avatar for the team’s telexistence system than its predecessors.

“Our work also has three altruistic goals,” Pucci explained. “First, the COVID-19 pandemic taught us that advanced telepresence technologies could soon become critical in a variety of fields, including healthcare and logistics. Second, avatars could allow people with severe physical disabilities to work and carry out tasks in the real world through robotic bodies. This could be the next step in the evolution of rehabilitation and prosthetic technologies. Finally, we can envision a future for tourism in which physical avatars are distributed around the world, and we can access them from home to visit Tokyo, New York, or New Delhi remotely by wearing a headset and a few other wearable devices.”

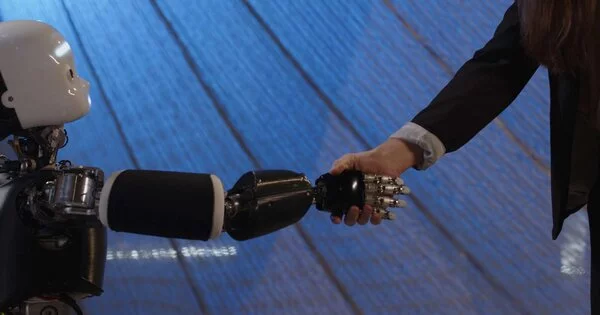

Pucci and his colleagues’ iCub3-based avatar system virtually transports a human operator to a remote location, where they can move, grasp, or manipulate objects, speak, and make facial expressions. The system has two main components: the operator technology and the avatar (iCub3).

The iCub3 robot, placed in the location the user is virtually visiting, reproduces the user’s movements, speech, and facial expressions. Meanwhile, through a set of wearable devices that are part of the operator technology, the user receives continuous visual, auditory, haptic, and tactile feedback from the remote environment.

Pucci explained that the operator technology combines devices developed at IIT with off-the-shelf products integrated into the system. “The IIT devices, developed in the context of iFeel (a start-up project of our lab), include a set of wearable technologies for tracking human motion and providing haptic feedback,” he said. “For example, we can use iFeel to track the movements of the operator’s arms and then project them onto the avatar. Similarly, it lets us simulate the sense of touch: if someone hugs the avatar, motors reproduce that touch for the human operator.”

In addition to the wearable technology developed by the IIT start-up project, the system’s operator hardware includes a variety of off-the-shelf devices, such as headsets, haptic gloves, and treadmills. The team’s current study focuses on developing algorithms and software architectures that efficiently combine the data collected by the off-the-shelf products and the iFeel devices, and that generate the corresponding outputs for the iCub3 avatar.

“The avatar, namely the iCub3 humanoid robot, is the second component of our system,” Pucci explained. “The iCub3 robot is 25 cm taller than earlier iCub versions, making it a more suitable platform for interacting in human environments. Its balance and locomotion are more robust, and it can better replicate human movements and physical interactions.”

Being larger than its predecessors, iCub3 weighs 52 kg, about 20 kg more than iCub 2.0. The motors in its legs are also more powerful than those of earlier iCub robots, allowing it to move faster and more efficiently.

“The iCub3 platform has different actuation mechanics, as it no longer relies on cable-driven joints,” Pucci added. “Compared with the current generation, it has an additional depth camera and force sensors that can sustain the robot’s increased weight. Finally, iCub3 has a larger-capacity battery housed inside the torso assembly rather than in a permanently attached backpack.”

To test and demonstrate its potential, the researchers used their avatar technology to remotely visit the Italian Pavilion at the 17th International Architecture Exhibition of the Venice Biennale. A standard fiber-optic connection allowed an operator at their Genoa campus to walk through the exhibition in real time.

“To the best of our knowledge, this is the first time an avatar system has conveyed the operator’s locomotion, manipulation, voice, and facial expressions to a legged humanoid avatar while providing visual, auditory, haptic, and tactile feedback,” Pucci said. “Using such a system in Venice to enable remote tourism is also, as far as we know, a first.”

In the future, avatar technology could allow users to virtually travel to cities or art exhibitions all over the world. The team also entered the system in the ANA Avatar XPRIZE, a competition with a total prize purse of $10 million for teams developing robotic avatars, where it is one of the semifinalists. Pucci and his colleagues are now working to improve the platform for the competition’s final stages.

“Another future plan for our work is related to the so-called Metaverse,” Pucci said. “We believe that many of the technologies and algorithms we developed could be valuable for controlling, and receiving information from, digital avatars in the Metaverse. We therefore intend to explore how to apply our findings in this new and exciting direction, and we hope that investors will join us on this journey.”